What is Performance Testing?

Performance testing is a non-functional software testing process that determines how a software application behaves in terms of speed, response time, scalability and stability under a particular workload. It measures quality attributes of the system such as scalability, reliability and resource usage.

Performance testing is not primarily about finding functional bugs or defects. Its main purpose is to identify and eliminate performance bottlenecks in the software application. It is a subset of performance engineering and is also known as “Perf Testing”.

It mainly focuses on certain factors of a Software Program such as:

- Speed – Determines whether the application responds quickly

- Scalability – Determines the maximum user load the software application can handle.

- Stability – Determines if the application is stable under varying loads

Why Is Performance Testing Required?

Performance testing gives stakeholders information about their application's speed, stability, and scalability. More importantly, it uncovers what needs to be improved before the product goes to market. Without it, the software might suffer from issues such as running slowly when several users use it simultaneously, inconsistencies across different operating systems, and poor usability.

Performance testing determines whether the software meets speed, scalability, and stability requirements under expected workloads. Applications that are sent to market with poor performance metrics due to nonexistent or poor performance testing are likely to gain a bad reputation and fail to meet expected sales goals. These are a few of the reasons why performance testing is needed.

Objective of Performance Testing

The objective of performance testing is to simulate real-world load conditions ahead of a production release, find the resulting performance issues, and spend time resolving them. Early detection of performance issues can save companies from unwanted website crashes, loss of customers and, consequently, loss of revenue.

What is the difference between Performance Testing and Performance Engineering?

Performance testing and performance engineering are two closely related yet distinct terms. Performance Testing is a subset of Performance Engineering and is primarily concerned with gauging the current performance of an application under certain loads.

To meet the demands of rapid application delivery, modern software teams need a more evolved approach that goes beyond traditional performance testing and includes end-to-end, integrated performance engineering. Performance engineering is the testing and tuning of software in order to attain a defined performance goal. Performance engineering occurs much earlier in the software development process and seeks to proactively prevent performance problems from the get-go.

Factors Which Impact Performance

There are multiple factors that impact the performance of any software.

Network

Slow network speeds, firewall inefficiency, and queries to a non-existent DNS server are examples of network-related performance issues.

Code Quality

Inefficient algorithms, memory leaks, deadlocks, and incorrect code optimization are some common code quality issues. In addition, in data-centric applications, the choice of database and incorrect schema design can degrade performance.

Traffic Spike/Load Distribution

Sudden traffic spikes can bring down applications if care is not taken to handle the surge in traffic. Similarly, incorrectly implemented load distribution can produce the same result when such spikes occur.

Types of Performance Testing

Load Testing

Checks the application’s ability to perform under anticipated user loads. The objective is to identify performance bottlenecks before the software application goes live.
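
As a rough illustration of a load test, the sketch below drives a fixed number of simulated users against a stand-in function and summarizes latency, errors and throughput. The function `handle_request` and its 10 ms delay are assumptions made for the example; in a real test it would be replaced by a call to the system under test.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def handle_request(user_id):
    """Hypothetical stand-in for one user action against the system under test."""
    time.sleep(0.01)  # assumption: the server takes about 10 ms per request
    return 200        # assumption: an HTTP-style status code


def timed_call(user_id):
    """Run one request and return (status, elapsed seconds)."""
    start = time.perf_counter()
    status = handle_request(user_id)
    return status, time.perf_counter() - start


def run_load_test(concurrent_users, requests_per_user):
    """Send requests from a fixed pool of simulated users and summarize the results."""
    total = concurrent_users * requests_per_user
    wall_start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=concurrent_users) as pool:
        results = list(pool.map(timed_call, range(total)))
    wall = time.perf_counter() - wall_start
    latencies = [elapsed for _, elapsed in results]
    errors = sum(1 for status, _ in results if status >= 400)
    return {
        "requests": total,
        "errors": errors,
        "avg_latency_s": sum(latencies) / total,
        "max_latency_s": max(latencies),
        "throughput_rps": total / wall,
    }
```

Calling `run_load_test(concurrent_users=20, requests_per_user=5)` returns a dictionary that can be compared against the anticipated load targets.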

Stress Testing

Involves testing an application under extreme workloads to see how it handles high traffic or data processing. The objective is to identify the breaking point of an application.
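
One way to picture the search for a breaking point is a loop that keeps raising the load until the error rate crosses a limit. The sketch below uses a made-up system model (`simulated_error_rate`, with an assumed capacity of 500 users) in place of real measurements; the step size and the 5% error limit are also assumptions.

```python
def simulated_error_rate(users, capacity=500):
    """Hypothetical system model: no errors up to `capacity`, then errors grow with overload."""
    if users <= capacity:
        return 0.0
    return min(1.0, (users - capacity) / capacity)


def find_breaking_point(measure, start=100, step=100, max_users=5000, max_error_rate=0.05):
    """Increase load step by step; return the first load whose error rate exceeds the limit."""
    users = start
    while users <= max_users:
        if measure(users) > max_error_rate:
            return users
        users += step
    return None  # no breaking point found within max_users


breaking_point = find_breaking_point(simulated_error_rate)
```

In practice `measure` would run a real test at the given load and return the observed error rate.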

Scalability Testing

Scalability testing checks the performance of an application by increasing or decreasing the load in set increments (number of users).

Scalability testing is divided into two parts which are as follows:

- Upward scalability testing

- Downward scalability testing

Upward scalability testing

In upward scalability testing, the number of users is increased in fixed steps until the application reaches a crash point. It is used to find the maximum capacity of an application.

Downward scalability testing

Downward scalability testing is used when a load test fails: the number of users is decreased at fixed intervals until the performance goal is met. This makes it easier to identify the bottleneck.
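
The downward procedure can be sketched as a simple loop. The response-time model below (`simulated_response_time_ms`) is invented for illustration, as are the step size and the 1000 ms goal; a real run would measure each load level instead.

```python
def simulated_response_time_ms(users):
    """Hypothetical model: 200 ms base latency plus 1 ms per concurrent user."""
    return 200 + users


def scale_down_until_goal(measure, failed_users, step, goal_ms):
    """Starting from a load that failed, reduce users by `step` until the goal is met."""
    users = failed_users
    while users > 0:
        if measure(users) <= goal_ms:
            return users  # highest tested load that meets the goal
        users -= step
    return None  # the goal was not met at any tested load


supported_users = scale_down_until_goal(
    simulated_response_time_ms, failed_users=1000, step=100, goal_ms=1000
)
```

The gap between the failed load and the returned load narrows down where the bottleneck starts to appear.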

Volume Testing

In volume testing, a large amount of data is populated in a database and the overall software system’s behaviour is monitored. The objective is to check the software application’s performance under varying database volumes.

Volume is a capacity while load is a quantity: load testing varies the number of users, and volume testing varies the amount of data.
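
A small volume test might look like the sketch below, which fills an in-memory SQLite database with a chosen number of rows and times one query. The `orders` table, its columns and the query are assumptions made for the example, not part of any particular application.

```python
import sqlite3
import time


def time_query_at_volume(row_count):
    """Populate a hypothetical orders table with row_count rows and time one query."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL)"
    )
    conn.executemany(
        "INSERT INTO orders (customer_id, amount) VALUES (?, ?)",
        ((i % 100, float(i)) for i in range(row_count)),
    )
    conn.commit()
    start = time.perf_counter()
    matched = conn.execute(
        "SELECT COUNT(*) FROM orders WHERE customer_id = ?", (7,)
    ).fetchone()[0]
    elapsed = time.perf_counter() - start
    conn.close()
    return matched, elapsed


# Same query, growing data volume; the number of users stays at one.
results = {rows: time_query_at_volume(rows) for rows in (1_000, 10_000)}
```

Comparing the elapsed times across volumes shows how the query degrades as the data grows.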

Endurance Testing

Ensures that the software can handle the expected load over a long period of time.
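
One simple signal to watch during a long run is drift: whether a metric at the end of the test is noticeably worse than at the start. The sketch below compares the first and last windows of a series of samples. The memory readings are invented, and the window-comparison approach is just one possible check, not a standard method.

```python
def drift_ratio(samples, window):
    """Ratio of the mean of the last `window` samples to the mean of the first `window`."""
    if window <= 0 or len(samples) < 2 * window:
        raise ValueError("need at least two full windows of samples")
    first = sum(samples[:window]) / window
    last = sum(samples[-window:]) / window
    return last / first


# Hypothetical memory readings (MB) taken at regular intervals during a long run.
memory_mb = [100, 100, 100, 120, 135, 150, 150, 150]
ratio = drift_ratio(memory_mb, window=3)
```

A ratio well above 1.0 for memory under a steady load is a hint that a leak may be worth investigating.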

Spike Testing

Tests the software’s reaction to sudden large spikes in the load generated by users.
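
A spike test needs a load profile that jumps abruptly and then falls back. The sketch below generates such a profile as a per-second target user count; the parameter names and the example numbers are assumptions for illustration.

```python
def spike_profile(baseline, peak, total_seconds, spike_start, spike_length):
    """Return the target number of users for each second of the test."""
    profile = []
    for second in range(total_seconds):
        in_spike = spike_start <= second < spike_start + spike_length
        profile.append(peak if in_spike else baseline)
    return profile


# 10 users normally, jumping to 100 users for 2 seconds starting at second 2.
profile = spike_profile(baseline=10, peak=100, total_seconds=6, spike_start=2, spike_length=2)
```

A load generator would then follow this profile, and the interesting measurements are how the system behaves during the jump and how quickly it recovers afterwards.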

Performance Testing Process

The methodology adopted for performance testing can vary widely but the objective for performance tests remains the same. It can help demonstrate that your software system meets certain pre-defined performance criteria. Or it can help compare the performance of two software systems. It can also help identify parts of your software system which degrade its performance.

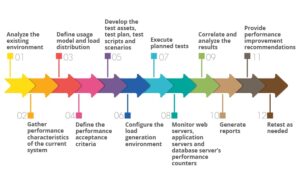

A generic process for performance testing

Identify your testing environment

Know your physical test environment, production environment and what testing tools are available. Understand details of the hardware, software and network configurations used during testing before you begin the testing process. It will help testers create more efficient tests. It will also help identify possible challenges that testers may encounter during the performance testing procedures.

Identify the performance acceptance criteria

This includes goals and constraints for throughput, response times and resource allocation. It is also necessary to identify project success criteria outside of these goals and constraints. Testers should be empowered to set performance criteria and goals because the project specifications often will not include a wide enough variety of performance benchmarks. Sometimes there may be none at all. When possible, finding a similar application to compare against is a good way to set performance goals.
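
Acceptance criteria are easier to apply when they are written down as explicit thresholds. The sketch below shows one possible shape; every metric name and limit in it is a made-up example, not a recommended value.

```python
# Hypothetical acceptance criteria: metric name -> (kind of limit, threshold).
CRITERIA = {
    "p95_response_time_s": ("max", 2.0),
    "throughput_rps": ("min", 100.0),
    "cpu_utilization_pct": ("max", 80.0),
}


def evaluate(measured, criteria):
    """Return a list of human-readable failures; an empty list means all criteria passed."""
    failures = []
    for name, (kind, limit) in criteria.items():
        value = measured[name]
        ok = value <= limit if kind == "max" else value >= limit
        if not ok:
            failures.append(f"{name}: measured {value}, required {kind} {limit}")
    return failures


# Hypothetical results from one test run.
measured = {"p95_response_time_s": 1.4, "throughput_rps": 85.0, "cpu_utilization_pct": 72.0}
failures = evaluate(measured, CRITERIA)
```

Here only the throughput goal is missed, so `failures` contains a single entry.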

Plan & design performance tests

Determine how usage is likely to vary amongst end-users and identify key scenarios to test for all possible use cases. It is necessary to simulate a variety of end-users, plan performance test data and outline what metrics will be gathered.
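
To simulate a variety of end-users, a test plan often assigns each key scenario a share of the traffic. The sketch below draws a workload from such a mix; the scenario names and weights are hypothetical, and the fixed seed is only there to make runs repeatable.

```python
import random
from collections import Counter

# Hypothetical scenario mix: scenario name -> relative weight.
SCENARIO_WEIGHTS = {"browse_catalog": 60, "search": 25, "checkout": 15}


def build_workload(total_sessions, weights, seed=42):
    """Pick a scenario for each simulated session according to the weights."""
    rng = random.Random(seed)
    names = list(weights)
    picks = rng.choices(names, weights=[weights[n] for n in names], k=total_sessions)
    return Counter(picks)


workload = build_workload(1000, SCENARIO_WEIGHTS)
```

The resulting counts tell the load generator how many sessions of each scenario to run.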

Configure the test environment

Prepare the testing environment before execution. Also, arrange tools and other resources.

Implement test design

Create the performance tests according to your test design.

Run the tests

Execute and monitor the tests.

Analyze, tune and retest

Consolidate, analyze and share test results. Then fine-tune and test again to see whether performance has improved or degraded. Since improvements generally grow smaller with each retest, stop when the remaining bottleneck is the CPU. At that point, you may have to consider increasing CPU power.
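
The diminishing returns of repeated tuning can be tracked with a small calculation. The sketch below takes the response time measured after each tuning round and reports the first round whose gain fell below a chosen threshold; the sample numbers and the 5% threshold are assumptions, and this is only one possible stopping heuristic.

```python
def first_round_below_gain(response_times_ms, min_gain_pct=5.0):
    """Return the index of the first retest whose improvement over the previous
    round is smaller than min_gain_pct, or None if every round cleared it."""
    for i in range(1, len(response_times_ms)):
        previous, current = response_times_ms[i - 1], response_times_ms[i]
        gain_pct = (previous - current) / previous * 100.0
        if gain_pct < min_gain_pct:
            return i
    return None


# Hypothetical response times (ms): baseline, then after each tuning round.
history = [2000, 1500, 1300, 1280]
stop_at = first_round_below_gain(history)
```

A round that makes performance worse has a negative gain, so it is also reported.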

Tips for Performance Testing

(1) Create a testing environment that mirrors the production ecosystem as closely as possible. Without that, the test results may not be an accurate representation of the application’s performance when it goes live.

- Separate the performance testing environment from the UAT environment.

- Identify test tools that best automate your performance testing plan.

- Run tests several times to obtain an accurate measure of the application’s performance. If you are running a load test, for instance, run the same test multiple times to determine whether the outcome is consistent before you mark the performance as acceptable or unacceptable.

- Do not make changes to the testing environment between tests.

(2) Pick user-centric software performance testing metrics

(3) Dive into functional testing and non-functional testing approaches

(4) Cloud application performance hinges on a solid testing plan

(5) Cloud performance testing is necessary for app migration

(6) Find the right software testing methods for your dev process
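
The advice to run the same test several times and check for consistency can be made concrete with a simple spread measure. The sketch below uses the coefficient of variation (standard deviation divided by mean) over repeated runs; the 10% limit and the sample values are assumptions, not an established threshold.

```python
import statistics


def is_consistent(run_results, max_cv=0.10):
    """Treat repeated runs as consistent when their coefficient of variation is small."""
    mean = statistics.mean(run_results)
    cv = statistics.stdev(run_results) / mean
    return cv <= max_cv


# Hypothetical average response times (s) from three runs of the same load test.
stable_runs = [1.00, 1.02, 0.98]
unstable_runs = [1.0, 2.0, 0.5]
```

With these numbers `is_consistent(stable_runs)` is true and `is_consistent(unstable_runs)` is false, which suggests the second set needs investigation before any pass or fail verdict.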

Performance Testing Metrics: Parameters Monitored

The basic parameters monitored during performance testing include:

| Performance Testing Metrics | Description |
| --- | --- |
| Processor usage | The amount of time the processor spends executing non-idle threads. |
| Bandwidth | The bits per second used by a network interface. |
| Memory use | The amount of physical memory available to processes on a computer. |
| Response time | The time from when a user enters a request until the first character of the response is received. |
| Memory pages/second | The number of pages written to or read from the disk in order to resolve hard page faults. Hard page faults occur when code not from the current working set is called up from elsewhere and retrieved from a disk. |
| Disk time | The amount of time the disk is busy executing a read or write request. |
| CPU interrupts per second | The average number of hardware interrupts a processor receives and processes each second. |
| Page faults/second | The overall rate at which page faults are processed by the processor. This again occurs when a process requires code from outside its working set. |
| Thread counts | An application's health can be measured by the number of threads that are running and currently active. |
| Private bytes | The number of bytes a process has allocated that can’t be shared amongst other processes. These are used to measure memory leaks and usage. |
| Throughput | The rate at which a computer or network receives requests, per second. |
| Committed memory | The amount of virtual memory used. |
| Rollback segment | The amount of data that can be rolled back at any point in time. |
| Garbage collection | The return of unused memory back to the system. Garbage collection needs to be monitored for efficiency. |
| Maximum active sessions | The maximum number of sessions that can be active at once. |
| Database locks | Locking of tables and databases needs to be monitored and carefully tuned. |
| Hit ratios | The number of SQL statements that are handled by cached data instead of expensive I/O operations. This is a good place to start solving bottlenecking issues. |
| Amount of connection pooling | The number of user requests that are met by pooled connections. The more requests met by connections in the pool, the better the performance will be. |
| Network output queue length | The length of the output packet queue in packets. Anything more than two means a delay, and the bottleneck needs to be resolved. |
| Top waits | Monitored to determine which wait times can be cut down when dealing with how fast data is retrieved from memory. |
| Disk queue length | The average number of read and write requests queued for the selected disk during a sample interval. |
| Network bytes total per second | The rate at which bytes are sent and received on the interface, including framing characters. |
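
Several of these metrics are derived from raw samples rather than read directly. As a sketch, the helpers below compute a response-time percentile (nearest-rank method), throughput and a cache hit ratio. The function names and sample values are assumptions for illustration; monitoring tools may define these figures slightly differently.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile: the smallest sample with at least pct% of values at or below it."""
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def throughput(request_count, duration_seconds):
    """Requests handled per second over the measurement window."""
    return request_count / duration_seconds


def hit_ratio(cache_hits, total_requests):
    """Fraction of requests answered from cache instead of expensive I/O."""
    return cache_hits / total_requests


# Hypothetical response times in milliseconds from one test run.
response_times_ms = [10, 20, 30, 40, 50, 60, 70, 80, 90, 100]
p90 = percentile(response_times_ms, 90)
```

Percentiles are often preferred over averages for response time because a few very slow requests can hide behind a good mean.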

Performance Testing Challenges

Some challenges within performance testing are as follows:

- Some tools may only support web applications.

- Free variants of tools may not work as well as paid variants, and some paid tools may be expensive.

- Tools may have limited compatibility.

- It can be difficult to test complex applications for some tools.

- Response time issue

- Organizations should also watch out for performance bottlenecks such as CPU, memory and network utilization. Disk usage and limitations of operating systems also should be watched out for.

Performance Test Tools

There is a wide variety of performance testing tools available in the market. The tool you choose will depend on many factors, such as the protocols supported, license cost, hardware requirements and platform support.

Below is a list of popular testing tools:

- LoadNinja – A cloud-based load testing tool that lets teams record and instantly play back comprehensive load tests without complex dynamic correlation, and run these load tests in real browsers at scale. According to the vendor, teams can increase test coverage and cut load testing time by over 60%.

- WebLoad – Combines performance, scalability, and integrity as a single process for the verification of web and mobile applications.

- HP LoadRunner – One of the most popular performance testing tools on the market today. This tool is capable of simulating hundreds of thousands of users, putting applications under real-life loads to determine their behaviour under expected loads. It features a virtual user generator that simulates the actions of live human users.

- JMeter – An Apache performance testing tool, and one of the leading tools for load testing web and application servers. JMeter plugins provide flexibility in load testing and cover areas such as graphs, thread groups, timers, functions and logic controllers. JMeter supports an integrated development environment (IDE) for recording tests from browsers or web applications, as well as a command-line mode for running load tests on any Java-compatible operating system.